TL;DR

Most SaaS vendors never respond to their reviews on G2, Capterra, or anywhere else - and on several platforms they don't even have the option. We went through every major platform to figure out what's possible: G2, Capterra, and Trustpilot support vendor responses (G2 charges for it), three platforms have no response mechanism at all, and one is a question mark. We also mapped how disputes work when a review is unfair, and what the responses that actually land have in common. The through-line we kept seeing: the best responses are written for everyone reading after the reviewer.

The Silent Majority Problem (And Why It's Your Advantage)

Go to any B2B software product on G2 or Capterra. Scroll through the reviews. Count how many have a vendor response underneath. On most product pages, the answer is zero. Or close to it.

In B2B SaaS, reviews are treated as a one-way broadcast. Customers write them, vendors collect them for badges and marketing pages, and almost nobody writes back. In restaurants and hotels, responding is standard practice. In software, the silence is the norm - and it's visible.

We checked the sorting capabilities on each platform, and what we found matters: G2 lets buyers sort reviews by star rating, lowest first. TrustRadius sorts by score. Capterra also lets buyers surface the lowest-rated reviews right away. A savvy buyer sorts reviews from worst to best, and the first thing they see is whether you showed up for the conversation or didn't. An unanswered 1-star review at the top of a sorted list is a statement about how much you pay attention.

A Yelp/Kelton study found that consumers are significantly more likely to look past a negative review when the business has responded. Harvard Business Review research found that responding to reviews correlates with improved ratings over time. Those studies are from the consumer space - B2B-specific data is thinner - but the underlying logic transfers: when you respond, you change the story the page tells.

The Audience That's Starting to Matter

AI systems already pull from G2, Capterra, and SourceForge when evaluating software for recommendations. Today, that mostly means aggregating ratings and pulling review snippets. But these systems are getting smarter - and the direction they're heading matters for how you think about your review pages.

The trajectory is toward AI that can distinguish authentic signal from noise. Systems that can tell the difference between a genuine product experience and a gift-card-motivated 5-star review. Between a company that engages with criticism and one that ignores it. Between marketing language and an honest response to a real problem.

When AI gets good at detecting manipulation and authenticity - and it will - where will it look for signal? Review pages. Third-party platforms where real users describe real experiences, where the vendor's response (or silence) is visible, and where patterns of engagement tell a story that marketing sites can't fake.

A negative review saying "onboarding took three months and support was unresponsive" paired with a vendor response saying "since this review, we've reduced average onboarding to two weeks and added a dedicated onboarding specialist for every account" - that's corrective context. An unanswered negative review is the only narrative available.

Your review responses are mini product updates hosted on high-authority third-party domains. Every thoughtful response is indexable content about your product, written in the context of a real user's experience, on a domain that carries more weight than your own marketing site. The companies building this record now are preparing for an AI landscape that will reward authenticity over volume. We'll come back to this in the section on outdated reviews, because that's where this pays off most.

Who Actually Lets You Respond (And What It Costs)

We went through every review platform to map what's possible. The picture is more limited than it looks: only three of the seven major platforms - G2, Capterra, and Trustpilot - clearly support vendor responses, and G2 charges for it. Three appear to have no response mechanism at all, and one is unclear.

| Platform | Respond to Reviews? | Cost to Respond | Dispute/Flag Reviews? | Buyer Can Sort by Rating? |
|---|---|---|---|---|
| Capterra | Yes - responses displayed publicly inline | Free | Contact compliance team | Yes - "Highest Rated" sort option |
| G2 | Yes - responses displayed publicly | Paid plan required (~$2,999+/year for small businesses) | "Submit Your Concern" on each review | Yes - by star rating, lowest/highest |
| Trustpilot | Yes | Free (business account) | Formal flag/dispute process | Yes - by star rating |
| TrustRadius | Unclear | Unknown | Contact research team | Yes - by trScore |
| PeerSpot | No response mechanism found | N/A | Community policing + platform moderation | Sorting by rating, reviews, views |
| Gartner Peer Insights | Confirmed: no vendor response option | N/A | Vendor portal support available | Likely filterable (standard Gartner UI) |
| SourceForge | Confirmed: no ability to respond | N/A | No published dispute process found | Unclear |

A few things we found worth flagging:

Capterra is the most visible platform for responses. Responses display publicly inline under reviews, and it's free for any vendor regardless of PPC spend. If you're going to start responding somewhere, start here. Understanding how Capterra badges work helps contextualize why building review engagement matters on this platform.

G2 charges for what Capterra gives away. Responding to your own reviews - the most basic form of engagement with your customers' public feedback - requires a paid G2 subscription. G2 actively encourages vendor responses, but almost nobody does it. For early-stage companies watching their budget, this is a real factor in platform prioritization. If you're investing in G2, understanding how G2 badges work helps you get value from that spend.

TrustRadius is a question mark. We found paying vendors (Freshdesk has both "Profile Claimed" and "Trusted Seller" badges) with zero responses on negative reviews. Either the response feature doesn't exist or nobody uses it. We couldn't determine which. See our TrustRadius badges guide for more on building presence there.

Three platforms have no response mechanism. Gartner Peer Insights and SourceForge are confirmed, and we found no mechanism on PeerSpot: there's no way to respond to individual reviews on any of them. We verified GPI by checking Pipedrive's profile - a company that responds everywhere it can - and found no responses even on its 3-star reviews. If you have direct experience that contradicts any of this, reach out and we'll update this guide.

Trustpilot is the one that can surprise you. B2B SaaS companies often overlook Trustpilot because it's associated with consumer businesses - restaurants, e-commerce, travel. But Trustpilot is an open platform where anyone can create a business page and leave a review. Unlike the B2B-focused platforms where vendors create their own profiles, Trustpilot pages can exist and accumulate reviews without the vendor ever knowing. Worth checking whether yours exists. If it does, claim it and set up a free business account so you can respond. Our guide to how Trustpilot recognition works covers what B2B SaaS companies should know about this often-overlooked platform.

These capabilities change. Platforms update their vendor tools regularly. We'll maintain this table as features evolve.
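For teams that track this in a script or spreadsheet, the table above can be kept as data so the prioritization stays explicit. What follows is a minimal Python sketch, not a tool we ship: the `Platform` class, the `response_targets` helper, and the free-first ordering are illustrative assumptions, and the values are transcribed from the table as it stands today.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Platform:
    name: str
    can_respond: Optional[bool]  # None means we could not determine it
    response_cost: str


# Transcribed from the table above; re-check before relying on any row.
PLATFORMS = [
    Platform("Capterra", True, "free"),
    Platform("G2", True, "paid plan"),
    Platform("Trustpilot", True, "free (business account)"),
    Platform("TrustRadius", None, "unknown"),
    Platform("PeerSpot", False, "n/a"),
    Platform("Gartner Peer Insights", False, "n/a"),
    Platform("SourceForge", False, "n/a"),
]


def response_targets(platforms):
    """Return platforms where responding is possible, free ones first."""
    usable = [p for p in platforms if p.can_respond]
    # sorted() is stable, so table order is kept within each cost group.
    return sorted(usable, key=lambda p: not p.response_cost.startswith("free"))


print([p.name for p in response_targets(PLATFORMS)])
# ['Capterra', 'Trustpilot', 'G2']
```

Keeping "unclear" as `None` rather than `False` makes it easy to revisit TrustRadius later without mistaking it for a confirmed "no".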

When a Review Feels Unfair: The Dispute Playbook

So you got a 1-star review and something about it seems wrong. Maybe the reviewer is describing a product that isn't yours. Maybe it reads like a competitor planted it. Maybe they're citing a problem that was fixed eighteen months ago.

We went through the dispute processes on every platform and mapped what's possible. But the first honest question is: is this review actually unfair, or is it just uncomfortable?

What We Found Qualifies for a Dispute

The platforms we checked generally agree on what's removable:

- Competitor or employee review - someone with a conflict of interest posing as a customer

- Wrong product - the reviewer is clearly describing a different tool and mistakenly posted on your page

- Factually false claims - specific statements that are demonstrably incorrect (not opinions, not subjective experiences)

- Content policy violations - hate speech, personal attacks, confidential information, promotional content for another product

- Fake/fabricated - the reviewer never actually used your product (platforms with review verification catch more of these upfront)

And they agree on what isn't:

- A low rating from someone who genuinely used your product and didn't like it

- "The UI is confusing" or "support was slow" - these are opinions, even if they sting

- Reviews that are outdated (the fix is responding, not removing)

- A review that hurts your average score

Platforms take dispute abuse seriously. Your credibility with the moderation team matters if you ever need to dispute something real.
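The two lists above, combined with this guide's advice to respond wherever a platform allows it, amount to a small triage rule. Here is a hedged Python sketch of that rule. The `Reason` enum and `next_step` function are hypothetical names, and real cases need human judgment that a lookup can't replace.

```python
from enum import Enum, auto


class Reason(Enum):
    # Grounds the platforms generally accept for removal
    CONFLICT_OF_INTEREST = auto()  # competitor or employee posing as customer
    WRONG_PRODUCT = auto()
    FACTUALLY_FALSE = auto()
    POLICY_VIOLATION = auto()
    FABRICATED = auto()
    # Grounds they generally do not accept
    GENUINE_LOW_RATING = auto()
    OPINION = auto()
    OUTDATED = auto()
    HURTS_AVERAGE = auto()


DISPUTABLE = frozenset({
    Reason.CONFLICT_OF_INTEREST,
    Reason.WRONG_PRODUCT,
    Reason.FACTUALLY_FALSE,
    Reason.POLICY_VIOLATION,
    Reason.FABRICATED,
})


def next_step(reason: Reason, can_respond: bool) -> str:
    """Suggest an action for a review, given why it bothers you."""
    if reason in DISPUTABLE:
        # A disputed review stays visible during review, so respond too.
        return "dispute and respond" if can_respond else "dispute"
    if can_respond:
        return "respond"
    return "improve the product and encourage new reviews"


print(next_step(Reason.OUTDATED, can_respond=True))
print(next_step(Reason.WRONG_PRODUCT, can_respond=False))
```

Forcing every complaint into one named reason is the useful part: if none of the first five fits, the review is uncomfortable rather than unfair.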

How Disputes Work, Platform by Platform

We mapped each platform's process:

G2: Every review has a "Submit Your Concern" option that opens a support ticket with the review moderation team. Tickets are handled within 2 business days, and the reviewer isn't alerted unless G2 takes action. You can flag reviews for bias (employee, competitor, or business partner), misplacement (review belongs on a different product page), or quality issues that violate G2's Community Guidelines. This appears to be available on free listings. (The paid dashboard also offers "vendor challenges" - we haven't been able to confirm whether that's a separate process or the same one.)

Capterra: Contact the compliance team directly. They enforce strict content policy rules and will review flagged content.

TrustRadius: Contact the research team. They will remove employee reviews, competitor reviews, and fake profiles. Their stated policy on authentic reviews: they won't take them down, period.

Trustpilot: The most formalized dispute process of the group - a structured flag system with defined violation categories. Given how easy it is for anyone to post a review, the dispute process here matters more than on platforms with built-in authentication. Reviews that don't violate policy stay up.

Gartner Peer Insights: Contact support through the vendor portal. The specific dispute criteria aren't publicly documented. Since there's no response mechanism, disputing is the only option for problematic reviews.

PeerSpot: Triple-authentication at intake (including LinkedIn profile verification) reduces fakes at the front door. Community policing handles the rest - members can flag suspicious content, and the platform moderates. Learn more in our PeerSpot badges guide.

SourceForge: We found no published dispute process. Your best bet may be contacting their general support, but set expectations accordingly. See our SourceForge badges guide for more on building presence there.

While It's Being Reviewed (Or If It Stays)

A disputed review is still visible while the platform investigates - buyers are still reading it, and on most platforms the investigation takes time, sometimes weeks.

If the platform allows responses, respond to it anyway.

Write your response as if the review will stay forever, because it might. The moderation team may decide it doesn't violate policy. Or they may take weeks to act. Either way, every day that review sits unanswered, it's telling buyers a story you didn't author.

A response to a disputed review needs to be especially measured. Acknowledge the concern, state your perspective calmly, invite an offline conversation. The response itself is evidence of your professionalism - and if the review does get removed, your measured response will already have done its job for everyone who saw it in the meantime.

How to Respond to Negative Reviews

When we looked at the vendors doing this well, one pattern stood out: they're writing for everyone reading after the reviewer.

The person who left a 2-star review has already formed their opinion. You might bring them back - and it's worth trying - but the real value of your response is what it tells the next fifty buyers and AI agents who land on that page.

The Spine That Works

Every good negative review response we found follows the same structure:

Acknowledge → Own what you can → Provide context (not excuses) → Offer a next step → Sign with a name
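If your team drafts responses from a shared template, the spine can be enforced mechanically so no part gets skipped. This is a minimal Python sketch, assuming a hypothetical `build_response` helper. The sample wording is illustrative and the signer is made up.

```python
def build_response(acknowledge: str, own: str, context: str,
                   next_step: str, signer: str) -> str:
    """Assemble a reply in spine order; refuse to build if a part is empty."""
    parts = {
        "acknowledge": acknowledge,
        "own": own,
        "context": context,
        "next_step": next_step,
        "signer": signer,
    }
    missing = [name for name, text in parts.items() if not text.strip()]
    if missing:
        raise ValueError("missing parts: " + ", ".join(missing))
    body = " ".join(
        text.strip() for name, text in parts.items() if name != "signer"
    )
    # Sign with a person's name on its own line.
    return f"{body}\n- {signer.strip()}"


reply = build_response(
    acknowledge="You're right that onboarding took far too long.",
    own="That delay was on us.",
    context="We have since added a dedicated onboarding specialist for every account.",
    next_step="If you'd like to talk it through, reply here and I'll set up a call.",
    signer="Sarah, Customer Success",
)
print(reply)
```

The helper only guarantees that each part is present and in order. Whether the content is specific enough is still up to the person writing it.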

The emphasis shifts depending on the type of criticism. Here's what we mapped out for each scenario:

Constructive Criticism (They Have a Point)

The highest-value response you'll write. Someone experienced a real problem, described it clearly, and gave you the opportunity to show you're listening.

Acknowledge the specific issue they raised → Thank them for the detail → Share what you've done or are doing about it → Offer a direct channel if they want to continue the conversation

The difference between a good response and a wasted one here comes down to specificity. "We're always working to improve" means nothing. "We shipped a new onboarding flow in January that reduced average setup time by 60%" - that's a response buyers remember.

Bug Reports and Technical Issues

Reviewers sometimes use review platforms to report bugs, especially if they felt support wasn't responsive enough.

Acknowledge the technical issue → Confirm whether it's been resolved (with specifics) → If not resolved, explain where it stands → Provide a direct support channel

Nobody cares why the bug happened - they care whether it's fixed.

Feature Requests Disguised as Complaints

"This product would be great if it had X" written as a 3-star review.

Validate the need → Share whether X is on the roadmap (only if it genuinely is) → If it's not planned, explain honestly → Point to existing alternatives within the product if they exist

Don't promise a feature is "coming soon" if it's not prioritized. The reviewer - and everyone reading - will remember.

"Wrong Product" Reviews

Sometimes reviewers expected something your product doesn't do. "I thought this was a full CRM" for a product that's a lightweight contact manager.

Acknowledge the mismatch without dismissing their experience → Clarify what your product does focus on → Suggest what type of product might be a better fit (generously)

This is positioning work disguised as a review response. You're clarifying your product's identity for every future reader.

Emotional / Angry Reviews

The hardest to respond to. Someone is frustrated and their review reflects it.

Acknowledge the frustration directly → Don't match the energy → Stick to facts about what happened and what's changed → Offer a direct line to a real person → Keep it short

A long, detailed response to an emotional review reads like you're arguing. Keep it shorter than the review itself.

Outdated Reviews (The Problem Was Fixed)

This is where the AI angle from earlier pays off. A review from eighteen months ago complains about a problem you solved six months ago. The review still shows on the page. The old rating still counts in your average. But your response can update the narrative - for human buyers reading today, and for AI systems that will increasingly be able to weigh the original complaint against your correction.

Acknowledge the original issue was real → Confirm when and how it was resolved → Be specific: names of features, dates of releases, metrics of improvement → Invite them to try again

These responses do double duty: they reassure human buyers that the issue is resolved, and they place updated, authentic product information on a high-authority domain - exactly the kind of signal AI systems are learning to value. It's the closest thing to editing a third-party page about your product that you'll ever get.

How to Respond to Positive Reviews

It feels unnecessary - they already said nice things - but we noticed something when we looked across vendor profiles: the ones that respond to positive reviews have more reviews overall. That makes sense. Responding signals to future reviewers that someone actually reads these, and it signals to the platform that you're actively engaged. It also adds more of your voice to the page - building the kind of engagement record that will matter as AI gets better at reading these platforms.

What we found works:

For a detailed positive review (someone who put real effort in):

Thank them specifically for the detail → Reference something specific they mentioned → Share a brief note about what's coming that connects to what they liked → Sign with a name

For a short positive review (a few sentences, good rating):

Quick thanks → Acknowledge what they highlighted → Invite them to share more detail if they'd like → Sign with a name

For a positive review that mentions a minor complaint:

Thank them for the overall feedback → Acknowledge the specific concern (don't skip it) → Briefly note what you're doing about it or why it works that way → Sign with a name

What we saw vendors get wrong:

- Copy-pasting identical responses on every positive review. Buyers scroll, and they notice.

- Marketing speak. "We're thrilled to hear about your success with our platform!" reads like a bot wrote it.

- Pivoting to upsell. "Glad you love Feature X - have you tried our Premium tier?" is not a review response.

- Not signing. A response from "The [Company] Team" is less human than "- Sarah, Customer Success."

The Blastra Review Response Awards

Most review platforms award badges for volume and ratings - how many reviews you collected, how high your average score is. Those metrics matter, but they miss something: whether you actually show up when someone has something to say.

We think the real award goes to the companies willing to respond. So we're giving it. Here's what we found when we looked at actual review pages for several well-known B2B companies.

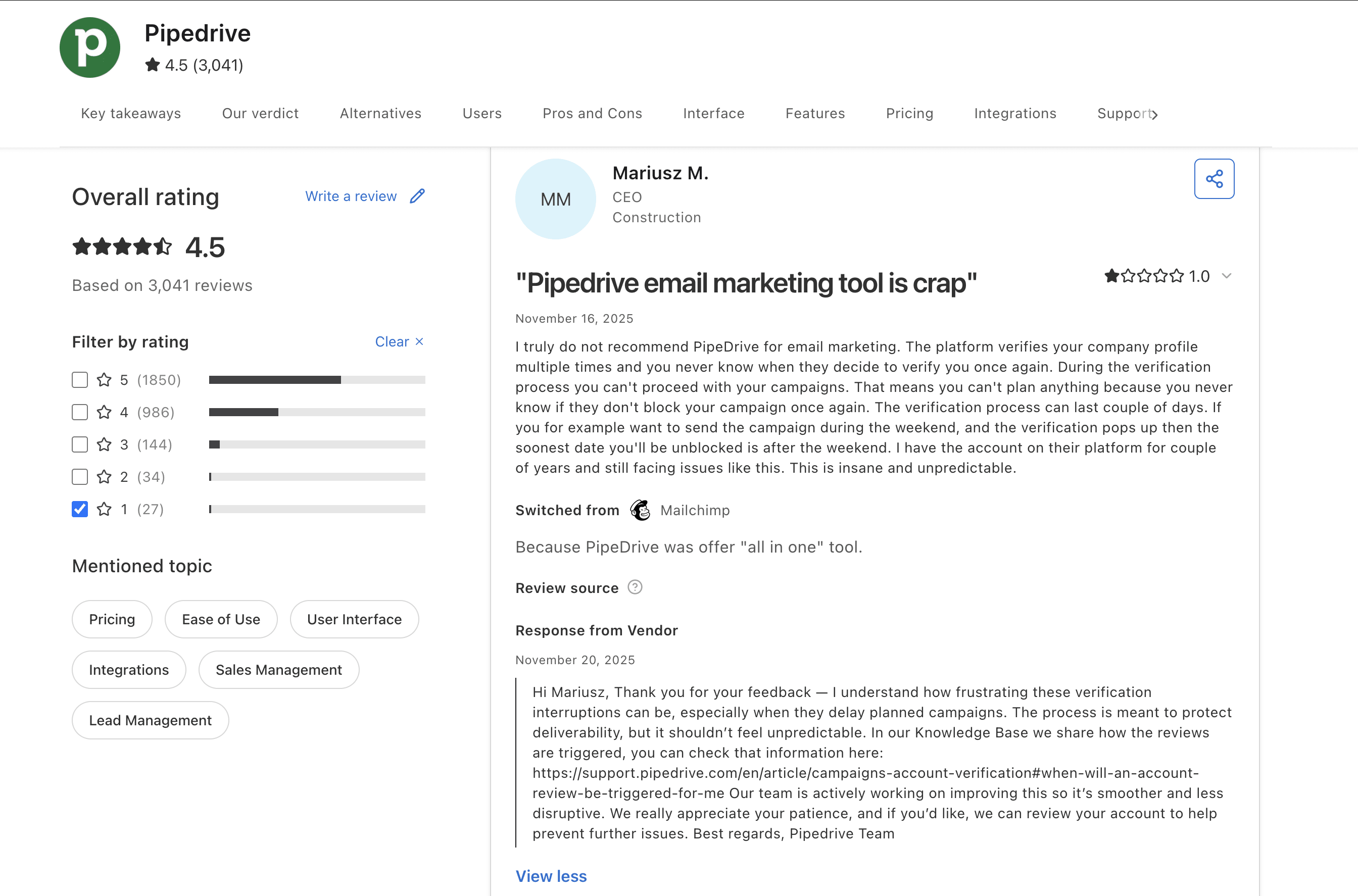

Best (And Nearly Only) Consistent Responder: Pipedrive

Pipedrive responds to reviews on every platform that allows it - G2, Capterra, Trustpilot - with personalized, specific messages that address actual feedback content rather than defaulting to boilerplate. They mention specific team members, and when a reviewer brings up a specific pain point, Pipedrive's response addresses that exact point.

What makes this more interesting: Pipedrive appears to be almost uniquely good at this. While companies with far larger support teams stay silent, Pipedrive shows up consistently across platforms. This is a deliberate practice, and the result is a review page where buyers can see the company is present, listening, and acting.

The Silent Giants

Slack on Capterra - 48 one-star reviews. Zero vendor responses. Complete silence from a company with the resources to respond to every single one.

Zendesk on G2 - 111 one-star reviews, including recent ones with titles like "Fraud Experience" and "frustrating, inefficient, deeply disappointing." No responses. The irony here is hard to miss: a customer support company ignoring customer feedback on public platforms.

Freshdesk on TrustRadius - Paying for "Profile Claimed" and "Trusted Seller" badges, which means they're investing in their TrustRadius presence. Zero responses on negative reviews. Spending on visibility but not on engagement.

These aren't small companies that haven't gotten around to it. These are companies with dedicated support organizations, and the pattern is the same across all of them.

Best Silent Leverager: Wiz

Wiz has 700+ reviews on G2 and 600+ on Gartner Peer Insights, with consistently stellar ratings. We couldn't find vendor responses to individual reviews on any platform we checked.

What they do instead: curate the best review quotes onto a dedicated /reviews page on their own website, turning third-party reviews into first-party marketing content.

This works - when your reviews are overwhelmingly positive. But it comes with two caveats. First, highlighting specific reviews on your website typically requires permissions that depend on your relationship with the platform. Second, this approach is fragile: it works beautifully at 4.7 stars with glowing testimonials, but a shift in sentiment with no response mechanism in place leaves you exposed. If you're going to play the silent game, do it intentionally.

Cautionary Tale: Webflow

Webflow has a vocal, passionate user base and hundreds of reviews across Capterra and G2. From what we could find, no vendor responses on review pages.

And the consequence shows up in the reviews themselves. One Capterra reviewer wrote about being "very disappointed with the lack of support from the Webflow team" after getting no response to direct outreach. When your absence from the conversation becomes part of the conversation, that's worth paying attention to.

The Template Hall of Shame

Across the platforms, the most common bad patterns we found:

The Non-Response: "Thanks for sharing your feedback!" - acknowledges the review exists without engaging with a single thing the reviewer said. Buyers see through it instantly.

The Copy-Paste: Identical text on every review, positive or negative. Go to any platform and scroll through a product's responses - if they all start with the same sentence, the vendor is treating reviews as a checkbox.

The Argument: A vendor response longer than the review itself, point-by-point disputing every criticism. Even if you're technically right, you look defensive. The buyer reading it thinks "this company can't take feedback."

The Marketing Pivot: A review mentions a problem, and the response redirects to a sales page. "Sorry to hear about the difficulty! Have you checked out our new Enterprise tier?" reads as tone-deaf at best.
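Some of these patterns are mechanical enough to catch before a response is posted. The sketch below is a rough Python pre-flight check, not a quality score. The `lint_response` name, the phrase lists, and the word-count comparison are all assumptions chosen for illustration. The copy-paste pattern is left out because detecting it needs your other responses to compare against.

```python
# Phrases quoted in this guide as examples of generic or upsell language.
GENERIC_PHRASES = (
    "thanks for sharing your feedback",
    "we're always working to improve",
    "we're thrilled to hear",
)
UPSELL_HINTS = ("premium tier", "enterprise tier")


def lint_response(review: str, response: str) -> list:
    """Return a list of warnings for a draft response (empty means none)."""
    issues = []
    # Normalize curly apostrophes so "we’re" matches "we're".
    lowered = response.lower().replace("\u2019", "'")
    if any(phrase in lowered for phrase in GENERIC_PHRASES):
        issues.append("generic phrase")
    if any(hint in lowered for hint in UPSELL_HINTS):
        issues.append("upsell pivot")
    # Crude proxy for "longer than the review itself": compare word counts.
    if len(response.split()) > len(review.split()):
        issues.append("longer than the review")
    lines = response.strip().splitlines()
    last_line = lines[-1].lower() if lines else ""
    # Heuristic: a signature line starts with "-" and names a person, not a team.
    if not last_line.startswith("-") or "team" in last_line:
        issues.append("no named signer")
    return issues


review = "Onboarding took three months and support was unresponsive."
# "Acme" is a placeholder company name.
draft = ("Thanks for sharing your feedback! Have you checked out our new "
         "Enterprise tier?\n- The Acme Team")
print(lint_response(review, draft))  # this draft trips all four checks
```

An empty list does not mean the response is good, only that it avoids the patterns above that a string match can see.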

What We Don't Know

Some gaps remain that we want to be upfront about:

Dispute timelines: We don't have reliable data on how long review dispute resolution takes across platforms. Anecdotally, it varies from days to weeks.

B2B-specific response impact data: The stat about buyers being more likely to overlook negative reviews when the business responds comes from consumer research. B2B-specific data on review response impact is sparse. The logic should hold - buyers are still people - but we don't have the B2B numbers to cite.

Source Documentation

| Source | Type | Notes |
|---|---|---|
| G2 Vendor Help Center | Primary | Review management, vendor challenges |
| Capterra Vendor Portal | Primary | Response capabilities, compliance |
| TrustRadius Vendor Resources | Primary | Review policy, dispute process |
| PeerSpot Vendor Solutions | Primary | Authentication, community features |
| Trustpilot Business | Primary | Dispute process, business tools |
| Blastra: G2 vs Capterra Vendor Pricing | Internal | G2 paywall confirmation |
| Blastra: Who Owns Your Software Reviews? | Internal | Review ownership, highlighting permissions |
| Blastra: How to Get G2 & Capterra Reviews | Internal | Companion guide - generating reviews |

Key Takeaways

G2, Capterra, and Trustpilot support vendor responses. G2 charges for it; Capterra and Trustpilot don't. Three other major platforms have no response mechanism at all, and TrustRadius is unclear. Start with Capterra.

Even on the platforms that allow it, almost nobody responds. Slack has 48 one-star reviews on Capterra with zero responses. Zendesk has 111 on G2. Pipedrive is one of the few companies we found doing this consistently - and it shows on their review pages.

Trustpilot is the platform most likely to have reviews accumulating without the vendor's knowledge. Check whether you have a page. If you do, claim it.

The dispute process exists on most platforms, but respond to disputed reviews as if they'll stay - because they might. Your response works for you either way.

Your real audience is everyone reading after the reviewer: buyers who sort by lowest rating, competitors doing research, and increasingly, AI systems that pull from these platforms when recommending software. When you respond, you're writing for all of them.

Specific beats generic. A real name at the bottom beats "The Team." And a review page where the vendor clearly shows up is worth more than a perfect star average with zero engagement.

If you need more reviews to start with, we mapped out how to get them in our guide to getting G2 and Capterra reviews.

FAQ

Which platforms let vendors respond to reviews? G2 and Capterra support public responses. Capterra is free; G2 requires a paid plan (~$2,999+/year). Trustpilot allows free responses through a business account. TrustRadius is unclear. Gartner Peer Insights, PeerSpot, and SourceForge have no response mechanism.

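How do I dispute a fake or unfair review? G2: "Submit Your Concern" on each review. Capterra: contact the compliance team. Trustpilot: structured flag process with defined violation categories. TrustRadius: contact the research team. Gartner Peer Insights: contact support through the vendor portal. PeerSpot: community policing plus platform moderation. SourceForge: no published process.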

What qualifies as a removable review? Competitor/employee reviews posing as customers, wrong-product reviews, factually false claims (not opinions), policy violations, and fabricated reviews from non-users. Negative, outdated, or score-hurting reviews are not removable.

Should I respond to positive reviews? Yes. Vendors who respond to positive reviews tend to have more reviews overall. It signals engagement and encourages future reviewers to participate.

How long should a response to a negative review be? Shorter than the review itself. Long responses appear defensive. Structure: acknowledge, own what you can, brief context, next step, sign with a name.

What if I can't respond on a platform? Use the dispute process for policy violations. For legitimate negative feedback, focus on product improvements and encouraging new reviews that reflect the current experience.

Do AI systems read review responses? Yes. AI systems pull from G2, Capterra, and SourceForge when recommending software. Thoughtful responses - especially explaining fixes to outdated complaints - provide corrective context for both buyers and AI.

Related Reading

Platform Guides

- How to Earn G2 Badges - Understanding G2's badge system and what the paid subscription gets you

- How to Earn Capterra Badges - Free badges with strong visibility

- How Trustpilot Recognition Works - The overlooked B2B platform

- How Gartner Peer Insights Works - Enterprise buyer credibility

- How to Earn TrustRadius Badges - Independent B2B alternative

- How to Earn PeerSpot Badges - Verified reviewer platform

- How to Earn SourceForge Badges - Developer-focused recognition

- How to Get G2 & Capterra Reviews - Companion guide to generating reviews

Strategy & Analysis

- Who Owns Your Software Reviews? - Review ownership and platform terms

- G2 vs Capterra Vendor Pricing Compared - Understanding what you pay for

- How AI Recommends SaaS - Why review presence matters for AI discovery

- Directory Strategy as Competitive Moat - Building defensible presence

- Software Badges Decoded - What badges actually signal